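It took me about two and a half days from getting the task to finishing it, and I ran into quite a few problems along the way, for example:

1. The scraped data was in the wrong format, not a dictionary;

2. Some information was missing from the scraped data;

3. The scraped text could not be displayed as Chinese;

4. The scraped data could not be written to a file

...and so on.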
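Problems 1, 3 and 4 mostly come down to three details visible in the script: the API replies with JSONP (the JSON is wrapped in a `getData(...)` call because the URL contains `callback=getData`), `json.dumps` escapes Chinese as `\uXXXX` unless `ensure_ascii=False` is passed, and the output file has to be written as UTF-8. Here is a minimal Python 3 sketch of those steps on a made-up sample response. `strip_jsonp`, `to_record`, the sample data and the file name `comments_sample.jsonl` are hypothetical; only the output field names follow the script. Missing user fields (possibly what problem 2 was about) fall back to an empty string.

```python
import json


def strip_jsonp(text):
    """Return the JSON inside a JSONP response such as 'getData({...});'."""
    start = text.index('(') + 1   # first "(" opens the callback call
    end = text.rindex(')')        # last ")" closes it
    return text[start:end]


def to_record(comment):
    """Flatten one comment object; missing fields become ''."""
    user = comment.get('user', {})
    return {
        'nick': user.get('nickname', ''),
        'comment': comment.get('content', ''),
        'region': user.get('location', ''),
        'userid': str(user.get('userId', '')),
        'id': str(comment.get('commentId', '')),
    }


# Hypothetical sample response, shaped the way the script expects
sample = ('getData({"comments": {"1": {"commentId": 1, "content": "雄安加油", '
          '"user": {"nickname": "网友", "location": "河北"}}}});')

data = json.loads(strip_jsonp(sample))
records = [to_record(c) for c in data['comments'].values()]

escaped = json.dumps(records[0])                       # Chinese becomes \uXXXX escapes
readable = json.dumps(records[0], ensure_ascii=False)  # Chinese is kept as-is

# An explicit UTF-8 encoding avoids encoding errors when writing Chinese text
with open('comments_sample.jsonl', 'w', encoding='utf-8') as f:
    f.write(readable + '\n')
```

Slicing between the first `(` and the last `)` is a bit safer than deleting every `getData(` and `);` with a regex, because a comment whose text contains `);` is left untouched.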

Enough talk, let's go straight to the code... if anything is unclear, look it up on Baidu yourself~~~

```python
# -*- coding:utf-8 -*-
# Python 2 script: urllib2 and the print statement do not exist in Python 3
import json
import sys
import urllib2

# Python 2-only workaround so unicode text can be printed and written
# to the file without UnicodeEncodeError (problems 3 and 4 above)
reload(sys)
sys.setdefaultencoding('utf8')

BASE_URL = ('http://comment.news.163.com/api/v1/products/'
            'a2869674571f77b5a0867c3d71db5856/threads/CHEN8TPF0001875P/comments/')
QUERY = '&showLevelThreshold=72&headLimit=1&tailLimit=2&callback=getData&ibc=newspc'
HEADERS = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) '
                         'Gecko/20100101 Firefox/52.0'}


def get_dict():
    with open('xa35.json', 'a') as f:
        for k in range(0, 4):
            if k == 0:
                # first request: the hot comments (40 of them)
                url = BASE_URL + 'hotList?offset=0&limit=40' + QUERY
            else:
                # later requests: the newest comments, 30 per page;
                # the offset advances by a full page (0, 30, 60) so pages do not overlap
                url = (BASE_URL + 'newList?offset=%d&limit=30' % ((k - 1) * 30)
                       + QUERY + '&_=1492685047382')

            request = urllib2.Request(url, headers=HEADERS)
            raw = urllib2.urlopen(request).read()

            # The response is JSONP, i.e. getData({...});
            # keep only the JSON between the first "(" and the last ")"
            raw = raw[raw.index('(') + 1:raw.rindex(')')]
            data = json.loads(raw)

            # data['comments'] maps each comment ID to a comment object
            for comment in data['comments'].values():
                user = comment['user']
                # missing user fields default to an empty string (problem 2)
                record = {
                    'nick': user.get('nickname', ''),
                    'comment': comment['content'],
                    'region': user.get('location', ''),
                    'userid': str(user.get('userId', '')),
                    'id': str(comment['commentId']),
                }
                # ensure_ascii=False keeps Chinese readable instead of \uXXXX escapes
                line = json.dumps(record, ensure_ascii=False)
                print line
                f.write(line + '\n')


if __name__ == '__main__':
    get_dict()
```
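`urllib2` and the `print` statement exist only in Python 2. On Python 3 the request side could look roughly like the sketch below. The product and thread IDs and the User-Agent are copied from the script; `page_urls`, `fetch_json` and `COMMON` are hypothetical names, the response is assumed to be UTF-8, and the `_=1492685047382` parameter, which looks like a cache-busting timestamp, is left out on the assumption that the server does not need it. Whether the NetEase endpoint still answers in this form is not guaranteed.

```python
import json
import urllib.request
from urllib.parse import urlencode

# IDs copied from the Python 2 script; the API may have changed since
BASE = ('http://comment.news.163.com/api/v1/products/'
        'a2869674571f77b5a0867c3d71db5856/threads/CHEN8TPF0001875P/comments/')
HEADERS = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) '
                         'Gecko/20100101 Firefox/52.0'}
# Query parameters shared by every request (hypothetical grouping)
COMMON = {'showLevelThreshold': 72, 'headLimit': 1, 'tailLimit': 2,
          'callback': 'getData', 'ibc': 'newspc'}


def page_urls(pages=3, page_size=30):
    """Yield the hot-list URL, then `pages` pages of the newest comments."""
    yield BASE + 'hotList?' + urlencode(dict(COMMON, offset=0, limit=40))
    for n in range(pages):
        # offset advances by one full page so pages do not overlap
        params = dict(COMMON, offset=n * page_size, limit=page_size)
        yield BASE + 'newList?' + urlencode(params)


def fetch_json(url, timeout=10):
    """Download one JSONP page and return the parsed JSON (assumes UTF-8)."""
    req = urllib.request.Request(url, headers=HEADERS)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        text = resp.read().decode('utf-8')
    # strip the getData( ... ); wrapper before parsing
    return json.loads(text[text.index('(') + 1:text.rindex(')')])
```

Usage would be `for url in page_urls(): data = fetch_json(url)`, followed by the same per-comment loop as in the script. `urlencode` puts the parameters in a different order than the hand-written URLs, which a typical HTTP API ignores.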

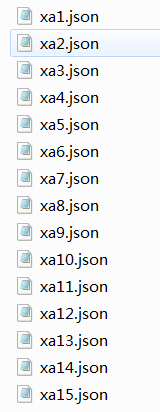

顺带附一张结果图:

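Reposted from the original article; contact the administrator if it should be removed.

Original article: "python爬取有关熊安新区的网易评论" (Scraping NetEase comments about the Xiong'an New Area with Python). Please credit the source when reposting!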